While working on my project to set up a new cloud-native homelab, I faced the challenge of provisioning Proxmox VE virtual machines with Fedora CoreOS. In order to achieve as much automation as possible, my goal was to have the full pipeline of provisioning steps automated through OpenTofu. One could also use Terraform here but that’s just a matter of taste - at least at the time of writing.

The general steps required to bootstrap a Fedora CoreOS machine are:

- Download the appropriate image file. For bare metal, this could be a simple live ISO, but for Proxmox (which is based on KVM and QEMU) the developers provide a qcow2 disk image.

- Prepare a Butane config which will transform the vanilla OS into whatever you need.

- Transpile the Butane config to Ignition.

- Boot Fedora CoreOS with this Ignition config and watch the magic happen.

To automate this, one could write a simple shell script that calls the required tools, such as wget and butane, but this approach breaks my goal of doing everything within OpenTofu.
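
For comparison, the manual route could look roughly like the following shell sketch. It only illustrates the steps listed above and is not used later in this post. The file names are placeholders, and the run helper with its DRY_RUN switch is a hypothetical convenience for previewing the commands without executing them.

```shell
#!/bin/sh
# Sketch of the manual pipeline; all file names are placeholders.

# Hypothetical helper: print the command instead of running it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

bootstrap_fcos() {
    butane_file="$1"    # Butane source, e.g. amazing-vm.bu
    ignition_file="$2"  # Ignition output, e.g. amazing-vm.ign

    # Step 1: download the QEMU image from the stable stream and decompress it (-d).
    run coreos-installer download -p qemu -f qcow2.xz -s stable -a x86_64 -d || return 1

    # Steps 2 and 3: transpile the prepared Butane config to Ignition.
    run butane --strict --pretty --output "$ignition_file" "$butane_file" || return 1

    # Step 4, booting with the Ignition config, depends on the hypervisor
    # and is left out of this sketch.
}
```

Calling DRY_RUN=1 bootstrap_fcos amazing-vm.bu amazing-vm.ign prints the two commands without running them.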

Defining the required OpenTofu providers

Fortunately, there are already providers available that we can use in this project:

- bpg/proxmox connects OpenTofu to Proxmox

- poseidon/ct transpiles Butane to Ignition

We will also add hashicorp/null and hashicorp/local to work around some limitations of Proxmox later on. Please note that the Proxmox provider requires some minimal configuration (address and credentials). I decided to hardcode only the address and pass the credentials as environment variables.

    # ./providers.tf
    terraform {
      required_providers {
        proxmox = {
          source  = "bpg/proxmox"
          version = "0.51.0"
        }
        ct = {
          source  = "poseidon/ct"
          version = "0.13.0"
        }
        null = {
          source  = "hashicorp/null"
          version = "3.2.2"
        }
        local = {
          source  = "hashicorp/local"
          version = "2.5.1"
        }
      }
    }

    provider "proxmox" {
      endpoint = "https://pve.domain.tld"

      # If Proxmox uses a self-signed TLS certificate, ignore the unknown issuer
      # insecure = true

      # Some resources require SSH access to Proxmox.
      ssh {
        agent = true
      }
    }

Getting the disk image into Proxmox storage

In order to boot Fedora CoreOS, we first need to find a way to make the disk image available to Proxmox. Remember that we want to implement as much of this as possible in OpenTofu.

The bpg/proxmox provider has a resource that can leverage the Proxmox API to download a file right into a storage area, but unfortunately this API is very picky when it comes to file extensions, so the intuitive approach will not work:

    # ./fedora-coreos-qcow.tf
    resource "proxmox_virtual_environment_download_file" "coreos_qcow2" {
      content_type = "iso"
      datastore_id = "local"
      node_name    = "pve"
      url          = "https://builds.coreos.fedoraproject.org/prod/streams/stable/builds/39.20240322.3.1/x86_64/fedora-coreos-39.20240322.3.1-qemu.x86_64.qcow2.xz"
    }

We receive the following error message:

Could not download file ‘fedora-coreos-39.20240322.3.1-qemu.x86_64.qcow2.xz’, unexpected error: error download file by URL: received an HTTP 400 response - Reason: Parameter verification failed. (filename: wrong file extension)

As it turns out, Proxmox can download disk images from a URL, but only if the files are not compressed. Since the Fedora CoreOS image is xz-compressed, we need to find another way to get the image file there. If we take a detour via our own machine, we can use the proxmox_virtual_environment_file resource instead, but this requires us to have the file available locally. After a quick discussion with the provider’s maintainer, we agreed that using a local-exec provisioner seems to be the best workaround for now. So let’s replace our previous attempt with this instead:

    # ./fedora-coreos-qcow.tf
    resource "null_resource" "coreos_qcow2" {
      provisioner "local-exec" {
        command = "mv $(coreos-installer download -p qemu -f qcow2.xz -s stable -a x86_64 -d) fedora-coreos.qcow2.img"
      }

      provisioner "local-exec" {
        when    = destroy
        command = "rm -f fedora-coreos.qcow2.img"
      }
    }

    resource "proxmox_virtual_environment_file" "coreos_qcow2" {
      content_type = "iso"
      datastore_id = "local"
      node_name    = "pve"

      depends_on = [null_resource.coreos_qcow2]

      source_file {
        path = "fedora-coreos.qcow2.img"
      }
    }

In this example we are using the stable stream and the x86_64 architecture. Feel free to adapt this to your liking.

Please note that because of the local-exec provisioner, we need to have the coreos-installer package installed locally. On Fedora, you can install it using dnf install coreos-installer. If the package is not available on your distro, you can also use wget and unxz to download and unpack the image file manually. In this case you will need to adapt the commands used.
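
A rough sketch of that wget and unxz alternative could look like this. The URL layout is copied from the download URL used in the first attempt above; the function names are made up for this example. You have to choose the build version yourself, and the sketch performs no signature verification.

```shell
#!/bin/sh
# Sketch: fetch and unpack the Fedora CoreOS QEMU image without coreos-installer.
# Function names are hypothetical; the URL pattern follows the URL shown earlier.

# Build the download URL for a given stream, build version and architecture.
fcos_image_url() {
    stream="$1"
    version="$2"
    arch="$3"
    printf 'https://builds.coreos.fedoraproject.org/prod/streams/%s/builds/%s/%s/fedora-coreos-%s-qemu.%s.qcow2.xz\n' \
        "$stream" "$version" "$arch" "$version" "$arch"
}

# Download, decompress and rename the image so that Proxmox accepts the extension.
fetch_fcos_image() {
    url=$(fcos_image_url "$1" "$2" "$3") || return 1
    wget -O fedora-coreos.qcow2.xz "$url" || return 1
    unxz fedora-coreos.qcow2.xz || return 1   # leaves fedora-coreos.qcow2 behind
    mv fedora-coreos.qcow2 fedora-coreos.qcow2.img
}
```

With these definitions in a script, the local-exec command could call something like fetch_fcos_image stable 39.20240322.3.1 x86_64 instead of coreos-installer.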

Once we have the unpacked qcow2 image available locally, the proxmox_virtual_environment_file resource will transfer it to Proxmox. It is important to use a content_type of iso here. While the API is a bit less picky than in our first attempt, it still validates the file extension, so we’re appending a “.img” suffix.

Transpiling the Butane configuration

The poseidon/ct provider allows us to perform the whole transpilation to Ignition within OpenTofu. As a bonus, we can even use the templatefile function to apply some templating to our config!

Here’s a sample configuration with some light templating to pass in the hostname, the admin user name and the admin SSH public key. This configuration will also install the QEMU guest agent to provide proper integration with Proxmox. I would have expected the agent to be preinstalled in an image that is specifically created for QEMU, but I’m also fine with installing it on my own.

    # ./butane/amazing-vm.yaml.tftpl
    variant: fcos
    version: 1.5.0
    passwd:
      users:
        - name: ${ssh_admin_username}
          groups: ["wheel", "sudo", "systemd-journal"]
          ssh_authorized_keys:
            - ${ssh_admin_public_key}
    storage:
      files:
        - path: /etc/hostname
          mode: 0644
          contents:
            inline: ${hostname}
    systemd:
      units:
        - name: "install-qemu-guest-agent.service"
          enabled: true
          contents: |
            [Unit]
            Description=Ensure qemu-guest-agent is installed
            Wants=network-online.target
            After=network-online.target
            Before=zincati.service
            ConditionPathExists=!/var/lib/%N.stamp

            [Service]
            Type=oneshot
            RemainAfterExit=yes
            ExecStart=rpm-ostree install --allow-inactive --assumeyes --reboot qemu-guest-agent
            ExecStart=/bin/touch /var/lib/%N.stamp

            [Install]
            WantedBy=multi-user.target

Next, render the template as data in OpenTofu, passing in the template parameters:

    # ./amazing-vm.tf
    data "ct_config" "amazing_vm_ignition" {
      strict = true
      content = templatefile("butane/amazing-vm.yaml.tftpl", {
        ssh_admin_username   = "admin"
        ssh_admin_public_key = "ssh-rsa ..."
        hostname             = "amazing-vm"
      })
    }

Creating a virtual machine in Proxmox

Now that we have the building blocks for a Fedora CoreOS machine in place, we can finally create a virtual machine in Proxmox:

    # ./amazing-vm.tf
    # (...)
    data "ct_config" "amazing_vm_ignition" {
      strict = true
      content = templatefile("butane/amazing-vm.yaml.tftpl", {
        ssh_admin_username   = "admin"
        ssh_admin_public_key = "ssh-rsa ..."
        hostname             = "amazing-vm"
      })
    }

    resource "proxmox_virtual_environment_vm" "amazing_vm" {
      # This must be the name of your Proxmox node within Proxmox
      node_name = "pve"

      name        = "amazing-vm"
      description = "Managed by OpenTofu"
      machine     = "q35"

      # Since we're installing the guest agent in our Butane config,
      # we should enable it here for better integration with Proxmox
      agent {
        enabled = true
      }

      memory {
        dedicated = 4096
      }

      # Here we're referencing the file we uploaded before. Proxmox will
      # clone a new disk from it with the size we're defining.
      disk {
        interface    = "virtio0"
        datastore_id = "local"
        file_id      = proxmox_virtual_environment_file.coreos_qcow2.id
        size         = 32
      }

      # We need a network connection so that we can install the guest agent
      network_device {
        bridge = "vmbr0"
      }

      kvm_arguments = "-fw_cfg 'name=opt/com.coreos/config,string=${replace(data.ct_config.amazing_vm_ignition.rendered, ",", ",,")}'"
    }

The kvm_arguments attribute might look a bit scary, so let’s break it down: -fw_cfg instructs QEMU to create a QEMU Firmware Configuration Device that we can use to inject our Ignition configuration into the virtual machine. Fedora CoreOS knows about this device and will read the Ignition configuration from it if we use the name opt/com.coreos/config. The final string=... part passes the rendered configuration from data.ct_config.amazing_vm_ignition. The rendered data goes through a replace call that escapes all occurrences of , with ,, because the comma is a reserved character in -fw_cfg arguments: an unescaped comma breaks the parsing of the argument and ultimately stops the virtual machine from starting. Trust me, I learned this the hard way.
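
To see the escaping in isolation, here is a small shell sketch that applies the same comma doubling to a made-up JSON string. The function name escape_fw_cfg is hypothetical; in the actual setup, the replace call in OpenTofu does this job.

```shell
#!/bin/sh
# Sketch: double every comma, mirroring replace(..., ",", ",,") in the VM resource.
escape_fw_cfg() {
    printf '%s\n' "$1" | sed 's/,/,,/g'
}

# Made-up sample input, not a real Ignition config.
escape_fw_cfg '{"a":1,"b":[2,3]}'
# prints {"a":1,,"b":[2,,3]}
```

QEMU turns each doubled comma back into a single one when it parses the argument, so the guest receives the original JSON.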

Applying the resources

With everything prepared, we can finally call tofu plan to see what is about to happen:

    $ export PROXMOX_VE_USERNAME="username@realm"
    $ export PROXMOX_VE_PASSWORD="incrediblysecure"
    $ tofu plan
    data.ct_config.amazing_vm_ignition: Reading...
    data.ct_config.amazing_vm_ignition: Read complete after 0s [id=4085097665]

    OpenTofu used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:
      + create

    OpenTofu will perform the following actions:

      # null_resource.coreos_qcow2 will be created
      + resource "null_resource" "coreos_qcow2" {
          + id = (known after apply)
        }

      # proxmox_virtual_environment_file.coreos_qcow2 will be created
      + resource "proxmox_virtual_environment_file" "coreos_qcow2" {
          + content_type = "iso"
          + datastore_id = "local"
          + file_modification_date = (known after apply)
          + file_name = (known after apply)
          + file_size = (known after apply)
          + file_tag = (known after apply)
          + id = (known after apply)
          + node_name = "pve"
          + overwrite = true
          + timeout_upload = 1800

          + source_file {
              + changed = false
              + insecure = false
              + path = "fedora-coreos.qcow2.img"
            }
        }

      # proxmox_virtual_environment_vm.amazing_vm will be created
      + resource "proxmox_virtual_environment_vm" "amazing_vm" {
          + acpi = true
          + bios = "seabios"
          + description = "Managed by OpenTofu"
          + id = (known after apply)
          + ipv4_addresses = (known after apply)
          + ipv6_addresses = (known after apply)
          + keyboard_layout = "en-us"
          + kvm_arguments = "-fw_cfg 'name=opt/com.coreos/config,string={\"ignition\":{\"config\":{\"replace\":{\"verification\":{}}},,\"proxy\":{},,\"security\":{\"tls\":{}},,\"timeouts\":{},,\"version\":\"3.4.0\"},,\"kernelArguments\":{},,\"passwd\":{\"users\":[{\"groups\":[\"wheel\",,\"sudo\",,\"systemd-journal\"],,\"name\":\"admin\",,\"sshAuthorizedKeys\":[\"ssh-rsa ...\"]}]},,\"storage\":{\"files\":[{\"group\":{},,\"path\":\"/etc/hostname\",,\"user\":{},,\"contents\":{\"compression\":\"\",,\"source\":\"data:,,amazing-vm\",,\"verification\":{}},,\"mode\":420}]},,\"systemd\":{\"units\":[{\"contents\":\"[Unit]\\nDescription=Ensure qemu-guest-agent is installed\\nWants=network-online.target\\nAfter=network-online.target\\nBefore=zincati.service\\nConditionPathExists=!/var/lib/%N.stamp\\n\\n[Service]\\nType=oneshot\\nRemainAfterExit=yes\\nExecStart=rpm-ostree install --allow-inactive --assumeyes --reboot qemu-guest-agent\\nExecStart=/bin/touch /var/lib/%N.stamp\\n\\n[Install]\\nWantedBy=multi-user.target\\n\",,\"enabled\":true,,\"name\":\"install-qemu-guest-agent.service\"}]}}'"
          + mac_addresses = (known after apply)
          + machine = "q35"
          + migrate = false
          + name = "amazing-vm"
          + network_interface_names = (known after apply)
          + node_name = "pve"
          + on_boot = true
          + protection = false
          + reboot = false
          + scsi_hardware = "virtio-scsi-pci"
          + started = true
          + stop_on_destroy = false
          + tablet_device = true
          + template = false
          + timeout_clone = 1800
          + timeout_create = 1800
          + timeout_migrate = 1800
          + timeout_move_disk = 1800
          + timeout_reboot = 1800
          + timeout_shutdown_vm = 1800
          + timeout_start_vm = 1800
          + timeout_stop_vm = 300
          + vm_id = (known after apply)

          + agent {
              + enabled = true
              + timeout = "15m"
              + trim = false
              + type = "virtio"
            }

          + disk {
              + aio = "io_uring"
              + backup = true
              + cache = "none"
              + datastore_id = "local"
              + discard = "ignore"
              + file_format = (known after apply)
              + file_id = (known after apply)
              + interface = "virtio0"
              + iothread = false
              + path_in_datastore = (known after apply)
              + replicate = true
              + size = 32
              + ssd = false
            }
        }

    Plan: 3 to add, 0 to change, 0 to destroy.

Use the plan to validate what is about to happen. Here, you have the chance to check your rendered Ignition config, too. If you like what you see, run tofu apply and watch your virtual machine being provisioned. If you receive an error, double-check your Proxmox endpoint (it usually runs on port 8006) and your storage configuration. Yours might differ from mine.

If all goes well, the new virtual machine will boot, run Ignition and go to the login screen. It will then silently install the QEMU guest agent and reboot. From what I have seen, the Proxmox provider will report the virtual machine being ready once the QEMU guest agent has connected. For me, this took about 3 minutes.

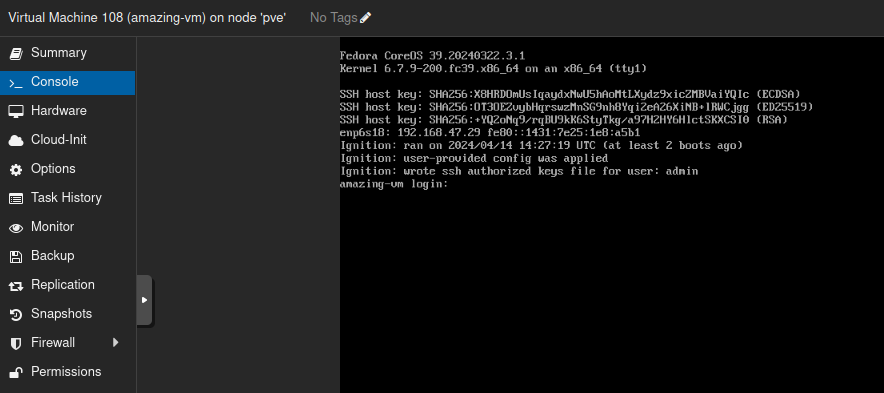

The fully-provisioned Fedora CoreOS VM

Note that it conforms to our specification: it has the correct hostname, network connectivity and our SSH public key.

I created a sample repository on GitHub that shows the full OpenTofu code for your reference. Feel free to open an issue there if something goes wrong and I will do my best to help you.

Troubleshooting

Error: error creating VM: received an HTTP 500 response - Reason: only root can set ‘args’ config

If you see this error message, make sure you are using the root user with password authentication instead of an API token, i.e. do not use the PROXMOX_VE_API_TOKEN environment variable. As of PVE 7.4, some Proxmox functionality is only available this way, and kvm_arguments (which sets the args option named in the error) seems to be one such case. Of course, this is not an ideal situation for security, and I really hope this will change at some point.
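
A minimal sketch of switching the shell session to password authentication, assuming the root account lives in the default pam realm (root@pam). The password is the same placeholder as above.

```shell
#!/bin/sh
# Make sure no API token is picked up from the environment.
unset PROXMOX_VE_API_TOKEN

# Assumption: root in the "pam" realm; replace the placeholder password.
export PROXMOX_VE_USERNAME="root@pam"
export PROXMOX_VE_PASSWORD="incrediblysecure"
```

After that, tofu plan and tofu apply run in the same session use the root credentials.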

Building from here

Creating Fedora CoreOS machines with OpenTofu opens up some really cool infrastructure automation possibilities. With just a few Butane configurations and some templating, we can spin up a whole fleet of Fedora CoreOS VMs that can fulfill all kinds of tasks in our environment: Why not create autoscaling Kubernetes clusters? Or maybe a few instances of HAProxy? Maybe GitLab runners? Everything is possible in a cloud-native world!

Happy hacking!